My instance is quite slow at searching (several tens of seconds for a single search, and sometimes 0 results are found until the page is reloaded).

I noticed that some other instances are quite fast at searching. Do you have any recommendations for improving search speed?

I have quite a lot of RAM (around 12 GB), the disks are in a RAID array, and I use a separate Solr server and the citation reference index.

Also, do you have any idea how to debug the speed?
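One way to start debugging is to time the same searches repeatedly from the command line, once while the peer is idle and once under load. Below is a minimal bash sketch, assuming the peer listens on the default port 8090 and answers the `yacysearch.json` API with the `query`, `resource` and `maximumRecords` parameters; `YACY_URL`, `time_search` and `summarize` are hypothetical names chosen for illustration.

```bash
# Sketch: time searches against a local YaCy peer.
# Assumptions: peer on localhost:8090, yacysearch.json endpoint available.
# YACY_URL, time_search and summarize are hypothetical names.
YACY_URL="${YACY_URL:-http://localhost:8090}"

# Print the total wall-clock time (seconds) curl needed for one local search.
time_search() {
  curl -s -o /dev/null -w '%{time_total}\n' -G \
    --data-urlencode "query=$1" \
    --data-urlencode "resource=local" \
    --data-urlencode "maximumRecords=10" \
    "$YACY_URL/yacysearch.json"
}

# Read one time per line on stdin and print count, min, average and max.
summarize() {
  awk 'NR == 1 { min = $1; max = $1 }
       { sum += $1; if ($1 < min) min = $1; if ($1 > max) max = $1 }
       END { if (NR) printf "n=%d min=%.3f avg=%.3f max=%.3f\n", NR, min, sum / NR, max }'
}
```

Usage: `for q in linux solr java linux; do time_search "$q"; done | summarize`. Repeating a query shows how much caching helps, and running `iostat -x 1` (from the sysstat package) in another terminal at the same time shows whether the disks are saturated while a search runs.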

I see that when my peer is under load: https://github.com/yacy/yacy_search_server/issues/656

Are they HDD or SSD?

HDD. The index is too big for an SSD.

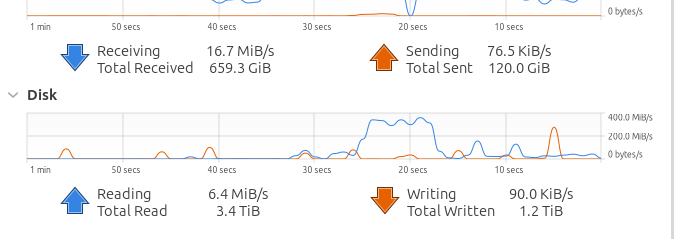

OK, HDD. I ran a search on my peer (~50 million documents) and disk reads reached nearly 400 MB/s on an SSD RAID array.

Ubuntu 24.04.1

SSD is not an option for me (although I have quite a fast RAID). Does anyone have other speed-optimisation tricks? Or does everyone who runs a fast instance use SSDs?

Running Solr’s “Optimise” helped a bit.
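For reference, an optimize can also be triggered through Solr’s update handler with `optimize=true`, which is handy on a separate Solr server. A minimal sketch, assuming Solr on `localhost:8983` and a core name you pass in (for example `collection1`, which as far as I know is the name of YaCy’s main core; adjust for your setup); `SOLR_URL`, `solr_optimize_url` and `solr_optimize` are hypothetical helper names.

```bash
# Sketch: ask Solr to optimize (force-merge) one core.
# Assumptions: Solr at localhost:8983; core name given as the first argument.
# SOLR_URL, solr_optimize_url and solr_optimize are hypothetical names.
SOLR_URL="${SOLR_URL:-http://localhost:8983}"

# Build the update-handler URL that requests an optimize for core $1.
solr_optimize_url() {
  printf '%s/solr/%s/update?optimize=true\n' "$SOLR_URL" "$1"
}

# Send the optimize request and print Solr's response.
solr_optimize() {
  curl -s "$(solr_optimize_url "$1")"
}
```

An optimize merges the index down to a single segment by default, so it rewrites the whole index: expect heavy disk I/O, especially on HDDs, and run it while the peer is quiet.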

I believe there must be at least some software optimisations possible.

@roamn, I noticed you’re using some non-standard Java options. Did you find out anything during your experiments or come to any conclusions?

It’s still really an experiment, based on what ChatGPT had to offer.

Here is what I am using now.

```bash
JAVA_ARGS="-server -Djava.awt.headless=true -Dfile.encoding=UTF-8";
JAVA_ARGS="-XX:+UseG1GC -XX:MaxGCPauseMillis=400 -XX:G1HeapRegionSize=64m -XX:MetaspaceSize=1024m -XX:MaxMetaspaceSize=2048m -Xss2048k $JAVA_ARGS";
```
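For anyone wondering what each of these options does, here is an annotated copy of the two JAVA_ARGS lines above: the same flags and values, built up one per line so each can carry a comment. The order differs from the original, which makes no difference here because no flag overrides another. Note that none of them sets `-Xmx`, the maximum heap size, which is usually the main memory knob.

```bash
# Annotated copy of the JAVA_ARGS above: same flags and values, one per line.
JAVA_ARGS="-server"                                # server VM (the only VM on 64-bit JDKs)
JAVA_ARGS="$JAVA_ARGS -Djava.awt.headless=true"    # AWT runs without a display
JAVA_ARGS="$JAVA_ARGS -Dfile.encoding=UTF-8"       # default character encoding
JAVA_ARGS="$JAVA_ARGS -XX:+UseG1GC"                # G1 garbage collector (default since JDK 9)
JAVA_ARGS="$JAVA_ARGS -XX:MaxGCPauseMillis=400"    # soft pause-time goal for G1 (default 200 ms)
JAVA_ARGS="$JAVA_ARGS -XX:G1HeapRegionSize=64m"    # size of each G1 heap region
JAVA_ARGS="$JAVA_ARGS -XX:MetaspaceSize=1024m"     # metaspace usage that first triggers a class-unloading GC
JAVA_ARGS="$JAVA_ARGS -XX:MaxMetaspaceSize=2048m"  # hard cap on class-metadata memory
JAVA_ARGS="$JAVA_ARGS -Xss2048k"                   # stack size per thread: 2 MB
```

To see the values the JVM actually ends up using, something like `java -XX:+UseG1GC -XX:G1HeapRegionSize=64m -XX:+PrintFlagsFinal -version | grep -E 'G1HeapRegionSize|MaxGCPauseMillis|MaxMetaspaceSize'` prints the final flag values.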

Thanks!

How much RAM do you have? Are these settings tailored to your amount of RAM?

Do you understand these settings, or is it just ‘GPT said’?

I’ve noticed you also tried some other JAVA_ARGS before. What results did you get from those experiments? Do these settings matter?

Or is anyone else using other settings, and with what results?

Looking at `top` and the processor usage, my tightest bottleneck may be Solr. I use a separate instance, not the bundled one, and I’m now considering SolrCloud. I also switched off RWI search, since I don’t search the P2P network, only the local instance.
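To check whether Solr itself is the slow part, one option is to compare YaCy’s end-to-end search time with the time Solr reports for a similar query in the `QTime` field of its response header (in milliseconds). A rough sketch, assuming Solr on `localhost:8983`; the core name and query are placeholders to adapt, `SOLR_URL`, `solr_qtime` and `extract_qtime` are hypothetical names, and `grep` stands in for a JSON parser to avoid extra dependencies.

```bash
# Sketch: read Solr's own query time (QTime, in ms) for one query.
# Assumptions: Solr at localhost:8983; core name and query are placeholders.
# SOLR_URL, solr_qtime and extract_qtime are hypothetical names.
SOLR_URL="${SOLR_URL:-http://localhost:8983}"

# Pull the first "QTime" value out of a Solr JSON response on stdin.
extract_qtime() {
  grep -o '"QTime":[[:space:]]*[0-9]*' | head -n 1 | grep -o '[0-9]*$'
}

# Usage: solr_qtime CORE QUERY  -> prints QTime in milliseconds
solr_qtime() {
  curl -s -G \
    --data-urlencode "q=$2" \
    --data-urlencode "rows=10" \
    --data-urlencode "wt=json" \
    "$SOLR_URL/solr/$1/select" | extract_qtime
}
```

If `QTime` stays small while the same search through YaCy takes tens of seconds, the delay is probably outside Solr; if `QTime` itself is large, Solr (and the disks under it) is the place to look.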

I have 384 GB of ram.

Just a guess, really.

It’s just what ChatGPT offered, though I might have increased the values a bit.

After reducing the httpd session pool to 20, YaCy returns error 503 instead of letting too many concurrent sessions overload the system.
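To watch this load shedding happen, you could fire a batch of parallel searches and count the HTTP status codes that come back; with a small session pool, some of them should be 503. A sketch, assuming the peer listens on `localhost:8090` and serves the `yacysearch.json` endpoint; `YACY_URL`, `tally_codes` and `probe_codes` are hypothetical names.

```bash
# Sketch: send N parallel searches and count the HTTP status codes returned.
# Assumptions: YaCy on localhost:8090 with the yacysearch.json endpoint.
# YACY_URL, tally_codes and probe_codes are hypothetical names.
YACY_URL="${YACY_URL:-http://localhost:8090}"

# Count identical status codes on stdin; prints "CODE COUNT" lines sorted by code.
tally_codes() {
  awk '{ count[$1]++ } END { for (c in count) print c, count[c] }' | sort
}

# Usage: probe_codes N QUERY  -> N concurrent requests, then a tally of codes
probe_codes() {
  seq "$1" | xargs -P "$1" -I{} \
    curl -s -o /dev/null -w '%{http_code}\n' -G \
      --data-urlencode "query=$2" --data-urlencode "resource=local" \
      "$YACY_URL/yacysearch.json" | tally_codes
}
```

For example, `probe_codes 40 linux` with a pool of 20 should show a mix of 200 and 503 if the limit is doing its job.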

OK, thanks! Maybe the JAVA_ARGS are worth experimenting with. I suppose it’s mainly about memory usage.

Has anyone else tried other settings?

That’s really a lot! My server can hold only a tenth of that.