Hi,

Would it be possible to implement a module in YaCy that indexes IPFS? ipfs-search.com has shut down, and I am currently looking for a decentralized search engine that could take over its role. Could YaCy be extended to cover IPFS, and what are your thoughts on this?

With kind regards

Konrad

Hi Konrad,

thanks for your idea!

It's interesting: there have recently been calls to include torrents and the dark web as well. Probably the decentralised nature of YaCy is sexy right now.

I have no idea about the structure of IPFS. Does it resemble the web? Are there URLs, hyperlinks and textual content? What steps do you think would be necessary to include it? How would that work?

YaCy is not module-based but rather monolithic, so it's not just about adding a module. But the P2P structure and the DHT/RWI transfers would definitely be usable for that, IMHO.
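To illustrate why DHT/RWI transfers could carry over to IPFS content: in a DHT, each word's reverse word index (RWI) entries are sent to the peers "responsible" for that word's hash, so no single peer has to hold the whole index. The sketch below is a generic consistent-hashing ring, not YaCy's actual DHT code (YaCy's word hashes, partitioning and redundancy settings differ in detail); the peer IDs and the use of a 64-bit SHA-256 prefix are assumptions for illustration.

```python
import bisect
import hashlib


def ring_position(key: str) -> int:
    """Map a string to a position on a 64-bit ring (first 8 bytes of SHA-256)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class HashRing:
    """Toy DHT ring (hypothetical): a word belongs to the first peer at or after its position."""

    def __init__(self, peer_ids):
        # Sort peers by their ring position so lookups can use binary search.
        self._ring = sorted((ring_position(p), p) for p in peer_ids)
        self._positions = [pos for pos, _ in self._ring]

    def peers_for(self, word: str, redundancy: int = 1):
        """Return up to `redundancy` distinct peers that would store RWI entries for `word`."""
        start = bisect.bisect_left(self._positions, ring_position(word))
        n = len(self._ring)
        count = min(redundancy, n)
        # Walk clockwise from the word's position, wrapping around the ring.
        return [self._ring[(start + i) % n][1] for i in range(count)]
```

For example, `HashRing(["peerA", "peerB", "peerC", "peerD"]).peers_for("ipfs", redundancy=2)` picks two neighbouring peers on the ring. Whether the indexed documents come from HTTP URLs or IPFS CIDs does not matter to this step, which is why the P2P layer looks reusable.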

As long as that is a proprietary format from a commercial product - no.

However, I do commercial consulting; if there is a customer, I would be open to requests.

Hi,

thank you for your feedback.

To understand how IPFS works, you can watch this short video:

https://www.youtube.com/watch?v=PlvMGpQnqOM

Understanding how it works is essential. As mentioned in my post, I want to restore the functionality of ipfs-search.com, which is why I'm exploring suitable solutions; a decentralized search engine seems to be one of the options. I have discussed this topic with Mathijs, one of the authors of ipfs-search.com. IPFS works differently from the standard web: instead of URLs, you work more or less with CIDs (content identifiers).

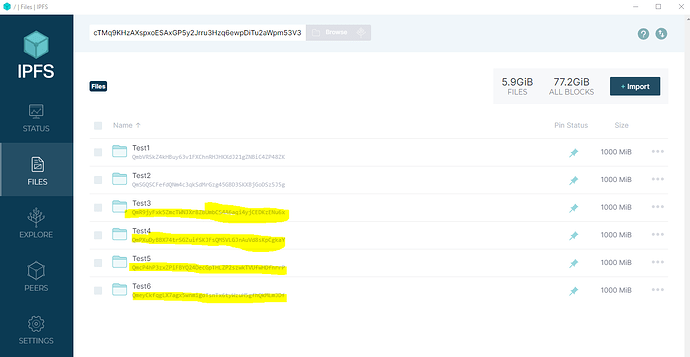

IPFS is like a virtual cosmos made up of countless CIDs. When you upload a new file to the IPFS network, a unique CID is generated. This CID is derived from a cryptographic hash of the file's or folder's content, so it uniquely identifies that content. Whenever you change your file or folder and upload it to IPFS again, a new CID is generated. Instead of a URL, you share the CID with your colleague. If they use an IPFS client or a browser that supports IPFS, they can download the content through their local client or one of the public gateways.
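To make "new content, new CID" concrete, here is a minimal sketch of how a CIDv1 is built for a single raw block: a version byte, a codec code, and a multihash (hash function code, digest length, SHA-256 digest), base32-encoded with a `b` prefix. Real `ipfs add` splits larger files into chunks (256 KiB by default) and builds a UnixFS DAG, and by default produces CIDv0 (`Qm...`) identifiers; as far as I know, the string below should only match what `ipfs add --cid-version=1` reports for a small file stored as one raw leaf. The function name is hypothetical.

```python
import base64
import hashlib

# Multiformats codes. Each value is below 0x80, so its unsigned-varint
# encoding is a single byte and can be written directly.
CID_VERSION_1 = 0x01
RAW_CODEC = 0x55      # "raw" binary codec
SHA2_256 = 0x12       # multihash code for sha2-256
SHA2_256_LEN = 0x20   # 32-byte digest length


def cidv1_raw(data: bytes) -> str:
    """CIDv1 of `data` as a single raw block, in base32 (multibase prefix 'b')."""
    digest = hashlib.sha256(data).digest()
    binary_cid = bytes([CID_VERSION_1, RAW_CODEC, SHA2_256, SHA2_256_LEN]) + digest
    # RFC 4648 base32, lower-cased and without '=' padding, as CIDv1 strings use.
    return "b" + base64.b32encode(binary_cid).decode("ascii").lower().rstrip("=")
```

Calling `cidv1_raw(b"Hello IPFS\n")` and `cidv1_raw(b"Hello IPFS!\n")` gives two unrelated-looking strings, both starting with `bafkrei`: changing a single byte changes the hash and therefore the CID, which is why an edited file becomes a "new" object that a crawler has never seen.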

In my opinion, this is a very intriguing solution. Without knowing the CID, the content doesn't exist for you or for other users. With IPFS you can share all kinds of things, including websites. Using this solution, we could build USENET 3.0: a publicly available place to exchange information. However, we need something that will traverse this never-ending space, gather information, and present it in human-readable form.
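Because content without a known CID is invisible, a crawler can only start from CIDs it already knows (seeds) and follow the links inside them, for example directory entries. A minimal breadth-first sketch, assuming a hypothetical `get_links(cid)` callable; in practice it might wrap an IPFS node's API or a gateway, but that part is deliberately left out so the traversal logic stays self-contained.

```python
from collections import deque
from typing import Callable, Iterable, List


def crawl(seed_cids: Iterable[str],
          get_links: Callable[[str], List[str]],
          max_nodes: int = 1000) -> List[str]:
    """Breadth-first traversal of the CID link graph, starting from known seed CIDs.

    The hash-linked graph has no cycles, but the same child CID can be reached
    through several parents, so a seen-set avoids fetching it twice.
    """
    seen = set()
    order = []
    queue = deque(seed_cids)
    while queue and len(order) < max_nodes:
        cid = queue.popleft()
        if cid in seen:
            continue
        seen.add(cid)
        order.append(cid)  # an indexer would fetch and parse this CID's content here
        for child in get_links(cid):
            if child not in seen:
                queue.append(child)
    return order
```

With a toy graph such as `{"root": ["a", "b"], "a": ["c"], "b": ["c"], "c": []}`, `crawl(["root"], lambda c: graph.get(c, []))` visits `root`, `a`, `b`, `c` once each. The `max_nodes` cap matters because, as Konrad says, the space is effectively never-ending.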

Mathijs mentioned that ipfs-search.com was based on an Elasticsearch cluster with an index of approximately 22 TB, and that managing such a large amount of data posed a significant challenge.

The question is: would YaCy, with a properly implemented distributed search model, work better?

Do you see any possibility of handling this amount of data, which will likely grow over time, with a distributed search engine like YaCy? Do you believe it would be technically feasible to implement such a solution (an IPFS crawler plus an IPFS search engine) in decentralized YaCy?

Cheers

Konrad

Hi,

And is there some indexable text behind a particular CID? And links to other CIDs?

(I'm trying to find something that resembles the WWW, which is what YaCy is built for.)

It would be interesting, but probably the only way to get there with YaCy is to fork it, start experimenting, and document the experiments. Java knowledge is probably necessary. But just replacing URLs with CIDs and implementing some parsers wouldn't be such a big deal for an experienced coder.
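One minimal reading of "replacing URLs with CIDs" is to give every CID a URL-shaped address that an existing crawler and index can store unchanged. The `<gateway>/ipfs/<cid>/<path>` path-gateway layout and the `ipfs://` scheme are existing IPFS conventions; the helper names and the default gateway host are assumptions, and YaCy's own URL classes are not shown here.

```python
def gateway_url(cid: str, path: str = "", gateway: str = "https://ipfs.io") -> str:
    """Path-gateway URL for a CID: <gateway>/ipfs/<cid>[/<path>] (hypothetical helper)."""
    url = f"{gateway.rstrip('/')}/ipfs/{cid}"
    if path:
        url += "/" + path.lstrip("/")
    return url


def ipfs_uri(cid: str, path: str = "") -> str:
    """Native ipfs://<cid>[/<path>] URI for IPFS-aware clients (hypothetical helper)."""
    uri = f"ipfs://{cid}"
    if path:
        uri += "/" + path.lstrip("/")
    return uri
```

Storing the `ipfs://` form in the index and resolving it through a gateway only when fetching would keep search results independent of any single gateway; that split is a design choice, not something the thread settled.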

Use a network definition file (other than the default 'freeworld') to create a separate network.

My personal YaCy node is just half a TB, so I have no experience handling such big data. Maybe the largest nodes, like @sixcooler or megaindex, have several TB, and you can ask them.
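A back-of-envelope check combining the two sizes mentioned in this thread: about 22 TB for the ipfs-search.com Elasticsearch index and about 0.5 TB for a single personal node. It assumes (a big assumption) that a YaCy index of the same content would be about as large, uses decimal units (1 TB = 1000 GB), ignores overhead, and assumes perfectly even distribution; the redundancy factor of 3 is only illustrative.

```python
def peers_needed(index_gb: int, peer_capacity_gb: int, redundancy: int = 1) -> int:
    """Minimum number of peers to hold `redundancy` copies of an index (ceiling division)."""
    total_gb = index_gb * redundancy
    return -(-total_gb // peer_capacity_gb)


print(peers_needed(22_000, 500))                # 44
print(peers_needed(22_000, 500, redundancy=3))  # 132
```

So even with three copies of every entry, on the order of a hundred and thirty half-terabyte peers could in principle hold an index of that size; the harder questions are query latency and churn, which this arithmetic does not cover.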

While using external Solr, I hit some limits on the number of entries; SolrCloud should probably be used instead of a standalone Solr. In my case, the biggest performance bottleneck is the RWI database, which can be redefined, tuned, switched off, etc.
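One well-known hard limit in this area is Lucene's per-index document cap, `IndexWriter.MAX_DOCS` (`Integer.MAX_VALUE - 128`, about 2.1 billion), which applies to each Solr core or shard; whether that is the limit hit here is an assumption. SolrCloud gets around it by splitting a collection into shards. A minimal sketch of the resulting lower bound on shard count (the function name is hypothetical, and real deployments keep shards far below the hard cap for performance):

```python
# Lucene's hard per-index cap: IndexWriter.MAX_DOCS = Integer.MAX_VALUE - 128.
LUCENE_MAX_DOCS = 2**31 - 1 - 128  # 2_147_483_519


def min_shards(num_docs: int, max_docs_per_shard: int = LUCENE_MAX_DOCS) -> int:
    """Smallest shard count so that no single Lucene index exceeds the document cap."""
    return max(1, -(-num_docs // max_docs_per_shard))


print(min_shards(5_000_000_000))  # 3
```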

And a crazy idea: if you know the people from the closed ipfs-search, wouldn't they want to open-source their code now that their business is over? ;-]