Hello everyone,

I have a local index of the websites most dear to me, which I regularly recrawl to keep it up to date. I’d like to include remote search results in my searches, but as soon as I enable this, my index gets polluted with lots of irrelevant URLs and the storage usage exceeds the system resources.

Is it possible to enable remote search but prevent the pollution of the local index?

Or, as an alternative: crawl into different collections (local → persistent, remote → cleared regularly)?

Best regards

Chris

Hi, Chris!

Not sure, but you could try setting:

remotesearch.result.store=false

https://eldar.cz/yacydoc/operation/yacy_conf.html#remote-search-details

I’m also not sure whether the remote search results are stored as RWIs or in the local Solr. In the first case, deleting the RWIs from time to time could help.
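If you would rather flip that setting from a script than by hand, here is a minimal sketch. The assumptions are mine: `set_property` is a hypothetical helper (not part of YaCy), the config is treated as a plain `key=value` properties file, and the path in the usage note is only where I would expect the file to be. It is probably safest to stop the peer first, since a running peer may write its own settings back.

```python
from pathlib import Path


def set_property(conf_path, key, value):
    """Set key=value in a properties-style file.

    Replaces an existing non-comment line for the key, or appends a new
    line if the key is not present. Hypothetical helper, not a YaCy API.
    """
    path = Path(conf_path)
    lines = path.read_text(encoding="utf-8").splitlines() if path.exists() else []
    new_line = f"{key}={value}"
    replaced = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith("#"):
            continue  # leave comment lines untouched
        name, sep, _ = stripped.partition("=")
        if sep and name.strip() == key:
            lines[i] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
```

Usage would look something like `set_property("DATA/SETTINGS/yacy.conf", "remotesearch.result.store", "false")`, with the path adjusted to your installation.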

It’s also in the GUI: Search Portal Integration > Portal Configuration > Index remote results.

add remote search results to the local index ( default=on, it is recommended to enable this option ! )

But I’m not really sure what that does… Will YaCy still show the remote results after unchecking it?

Hi okybaca, thank you, yes, this does the trick! YaCy shows remote results, but the local index remains unchanged.

Edit: Too early, this works only on a junior peer. When I activate it on my senior peer, I still get URLs via incoming DHT transmissions… I can delete them via the ‘dht’ collection, but this is probably not the intended way, as the incoming transmissions will just start again.

I’m not quite sure how exactly the remote search works. I thought it always uses DHT to transfer the results (maybe only for single-word queries?). But maybe it queries remote Solr instances as well? Really not sure.

Looking at the code would probably help to understand how that works…

And @orbiter would know for sure.

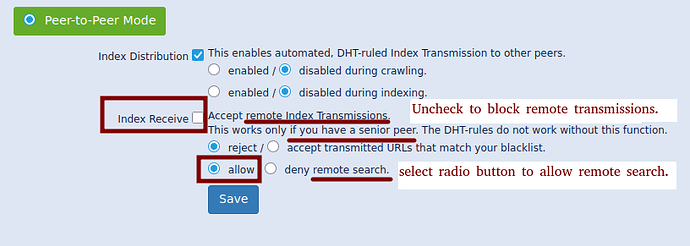

To stay in control of what content is indexed on your peer while still being able to perform a remote search, this works for me…

Append this to your YaCy URL:

/ConfigNetwork_p.html

Example: http://141.98.154.239:8090/ConfigNetwork_p.html

Configure your Peer-to-peer Mode:

Uncheck “Index Receive”, but allow “remote search”.
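If you manage more than one peer, the address of that page can be built from the peer's base URL. This is just a small sketch: `network_config_url` is a name I made up, and `http://localhost:8090` is only an assumed address for a peer running on the same machine.

```python
from urllib.parse import urljoin


def network_config_url(peer_base_url):
    """Return the address of the network configuration page for a peer.

    The leading slash makes the page path replace any path that is
    already part of the base URL.
    """
    return urljoin(peer_base_url, "/ConfigNetwork_p.html")


# Assumed local peer address; replace it with your own peer's URL.
print(network_config_url("http://localhost:8090"))
```

Open the resulting address in the browser and change the two settings there.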
