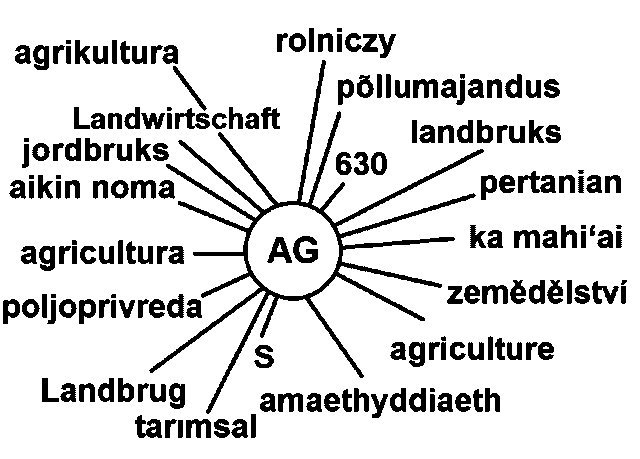

What does “the semantic web” mean? To me it simply means searching, spidering, indexing and serving web pages by concepts rather than words in any particular language.

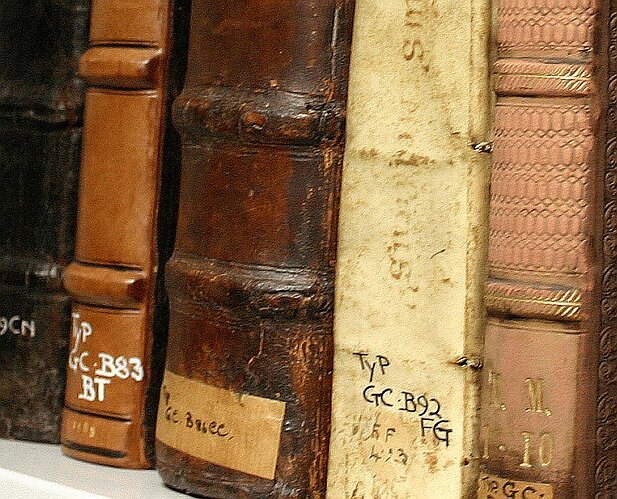

A simple and fairly well known example of this is most, if not all, public library book indexing systems.

Take DDS, the Dewey Decimal System.

In the DDS system, as an example, the number 006.3 represents the concept of Artificial Intelligence in computing. This conceptual representation cuts across language barriers and eliminates ambiguity.

So why not something like: (angle bracket)meta name=“dds” content=“006.3”(close bracket) ? as metadata for a website about artificial intelligence and why not have search engines that can read such conceptual metadata and index and serve up websites accordingly ?

Well, the number one reason is probably that OCLC (Online Computer Library Center, Inc.) which maintains and keeps the Dewey Decimal System up to date seems, in the past to have been a bit litigation prone. https://www.nytimes.com/2003/09/23/nyregion/where-did-dewey-file-those-law-books.html

Well, there are other conceptual classification systems, the Library of congress for example uses the code Q334-342 for the same concept, (Artificial Intelligence).

Maybe, but it is rather cumbersome and limited. Dewey had 10 primary categories represented by the numbers 0 through 9. So, can we count the categories of all the diverse information on the web on our fingers? That’s another reason not to use the Dewey system. Well The LOC system uses A through Z. Again, can all the information on the web be categorized by just 26 categories? I mean 21. For some reason, or no reason,the entire alphabet is not utilized. Can all human knowledge and information be divided into such a limited number of basic concepts?

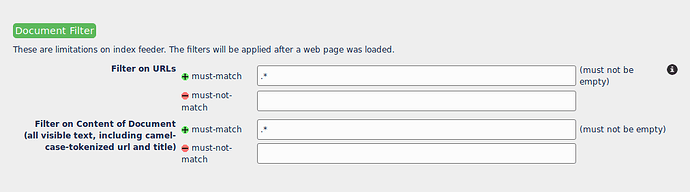

There is one library conceptual system that might work for the entire web, with some modification. Colon Classification of S. R. Ranganathan or some similar faceted classification system https://en.wikipedia.org/wiki/Faceted_classification.

But the conceptual categories or facets of the internet far exceed anything imagined by Ranganathan… Libraries of books do not have any such thing as an IRC chat room or internet message board or blogs or Live streams or shopping carts or auction sites or upcoming event calendars or social networks etc. etc.

A faceted classification system, nevertheless packs a maximum amount of metadata into an incredibly compact form. Basic information like subject, time, location, use/purpose, rank: importance, urgency, and more can be represented by a rather concise string of characters in a way that is both computer readable and unambiguous.