add the ability to search for anything but porn and violence

Most of my search keywords do not return unwanted lustful images, but I recognize that the internet is full of them. I don’t know what you consider “violence”. While I rarely see lustful images in my keyword searches, I often see unwanted propaganda and lies. But how could we filter all of these unwanted things out of our search results? The filter should be your own discernment, or else it could easily turn into censorship. Do you want a stranger deciding for you what you should or should not see?

If something comes up that you do not like, don’t click on it, look away, or close it. As long as you are in this fallen world, you will be subjected to temptations. This is your opportunity to strengthen your willpower and reject the things you do not want.

It probably depends on the quality of the indexes on the peers in the network. I must admit I don’t use the p2p index for search at all, as I find it useless. But at the same time, you have the opportunity to balance that with your own published index. Fair game.

It’s no surprise that words banned in other search engines are the stars of a non-censored one.

Hi @vBGgHuYtgGgT and others facing similar issues,

I ran into the same problem a while back with unwanted adult content indexing in YaCy. To tackle it, I started maintaining a custom blacklist that blocks some known porn domains. It was originally generated from a domain export off one of my peers about two years ago.

Here’s a download link to my current blacklist:

http://cloudparty.evils.in/index.php/s/xy4gxRCebBEPNsZ

You can also find it available via my peer: agent-smokingwheels.

Nice!

Idea: what about a GitHub repository with a shared blacklist? Advantages: the possibility to fork, patch and collaborate.

@okybaca Sharing on GitHub is a good idea for small lists.

I am currently testing a list with over 1 million entries, but it slows the search down a little, so there is a trade-off. I have not tried crawling with it yet.

I do a domain export and use a QB64 program to pick out keywords in the domain names to create the list.

Still experimenting and testing.

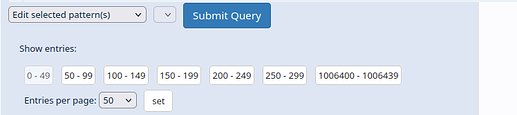

It’s impossible to add a large list through the current user interface, because you have to preview all the domains first before you can add them all at the bottom of the blacklist page.

I have found a workaround: if you overwrite the file DATA/LISTS/url.default.black, YaCy will load all of the entries automatically. I have a QB64 program that adds the extra “/*” to each domain in the list (if that is the correct syntax).
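For anyone without QB64, the formatting step can be sketched in Python. This is not the program mentioned above, only a rough equivalent under some assumptions: the export is a plain text file with one domain (or URL) per line, and the "domain/*" entry form is the one described in this thread. The function and file names are made up for illustration.

```python
from pathlib import Path
from typing import Optional


def to_blacklist_entry(line: str) -> Optional[str]:
    """Turn one exported line into a 'domain/*' entry, or None to skip it."""
    domain = line.strip().lower()
    if not domain or domain.startswith("#"):
        return None
    # If the export contains full URLs, keep only the host part.
    domain = domain.split("://", 1)[-1].split("/", 1)[0]
    return f"{domain}/*" if domain else None


def build_blacklist(domains_file: Path, blacklist_file: Path) -> int:
    """Write de-duplicated entries to blacklist_file and return how many."""
    entries = []
    seen = set()
    for line in domains_file.read_text(encoding="utf-8").splitlines():
        entry = to_blacklist_entry(line)
        if entry is not None and entry not in seen:
            seen.add(entry)
            entries.append(entry)
    blacklist_file.write_text(
        "".join(entry + "\n" for entry in entries), encoding="utf-8"
    )
    return len(entries)
```

Calling `build_blacklist(Path("domains.txt"), Path("DATA/LISTS/url.default.black"))` would overwrite the blacklist file as in the workaround above, so keep a copy of the existing file first.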

Do we feel it is appropriate to use a blacklist? Doesn’t that sort of jeopardize the quality of the index we’re pulling? Or is the blacklist limited to searches on the network?

I take your solution to mean you’re depriving the index of those things, whereas the solution being requested is more of a “safesearch”. Is this just a workaround for now, or am I misunderstanding? I am trying to learn how to participate more heavily, so I might ask too many questions lol. The hinge point is what happens if OP, God forbid, were feeling lustful or violent and the search engine were agnostic of morality. I’m not suggesting anything about the morality of porn or violence; I think that’s well-trodden ground in human history.

Is this not more of a post-search refinement step? I guess that would interfere with pagination if the API calls are also segmented by page, because filtering leaves you with fewer than X results on a page. If, say, 5 of the X=10 results on a page are porn, you’d end up with 10-5=5 results on that page.

@slyle

You’re right to raise this—it’s a nuanced question with both technical and ethical considerations.

On blacklisting (e.g. teen porn, ads):

Using a blacklist like DATA/LISTS/url.default.black in YaCy isn’t just about content filtering for individual users—it affects what gets crawled and indexed, and potentially what gets shared across the distributed network. That said, in my case, it’s my peer—and I have the freedom to block anything I like from being processed locally. I can still share my curated index with others, but I’m choosing what I contribute to the network. That’s one of the strengths of a decentralized search engine: each peer is in control of its own data policies.

This approach is a practical workaround for now, especially since the current web UI makes it difficult to bulk-import large domain lists without previewing each one. Overwriting DATA/LISTS/url.default.black directly allows automatic exclusion. I even wrote a QB64 tool to clean and format domains (e.g. appending "/*" where needed) to speed things up.

In summary:

- Blacklisting at the crawl and index stage gives each peer full control over what it processes and contributes. It’s not universal censorship—just local autonomy.

- I blacklist domains because I want control over what my peer indexes and shares—and this method works well for tuning my local results.

Appreciate the thoughtful conversation—these are the kinds of discussions that help shape a better decentralized search ecosystem.

Sounds pretty pragmatic to me. I am still getting used to the P2P aspect of YaCy and did not think about the reverse. With a tool as wide as this (integrations galore, portal mode, SearXNG, and the P2P and decentralized aspect), I imagine it will take me many moons to internalize all the motivations behind it. I initially started just because I really like the idea of only crawling the things I want in an engine. I am about to hop over to the docs, but if there is a Discord server, or if anyone is interested in collaborating on these discussions and the “art of the possible”, I can share my username or something, if that is within forum rules. That goes especially for the new AI paradigm, which makes YaCy imo THAT much more powerful (think of combining it with Retrieval Augmented Generation, and intranets for businesses of all scales at the click of a button!!!).

OH ALSO. Have you had any success with post-search displays where pagination is in effect?

It has been a massive, confusing pain for me, because the API call, before filtering, also has to be made in chunks. I suppose, as I write this, I could just request 2x, or some multiplier that reflects how restrictive the user’s filter is. It’s not the worst thing to show 8 here, 10 there, 5 here; it just looks unprofessional. This is unrelated to YaCy itself but indirectly related, as I’m working on a huge personal SearXNG tool (see my other forum post; if you have experience with Docker, PLEASE help lol).
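One way to avoid uneven pages, instead of guessing a multiplier, is to keep fetching chunks until the page is full. Below is a minimal Python sketch of that idea. It is not tied to the YaCy or SearXNG APIs: `fetch(offset, count)` and `is_allowed(item)` are hypothetical callables standing in for your backend call and your filter.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def filtered_page(
    fetch: Callable[[int, int], List[T]],
    is_allowed: Callable[[T], bool],
    page: int,
    page_size: int = 10,
    chunk_size: int = 20,
) -> List[T]:
    """Return page number `page` (0-based) of the results that pass the filter.

    Raw results are pulled chunk by chunk from the start until the
    requested page is full or the backend has nothing left.
    """
    to_skip = page * page_size  # allowed results that belong to earlier pages
    collected: List[T] = []
    offset = 0
    while len(collected) < page_size:
        chunk = fetch(offset, chunk_size)
        if not chunk:
            break  # backend exhausted; the last page may be short
        offset += len(chunk)
        for item in chunk:
            if not is_allowed(item):
                continue
            if to_skip > 0:
                to_skip -= 1
                continue
            collected.append(item)
            if len(collected) == page_size:
                break
    return collected
```

Every page except the last comes back full. The cost is that later pages rescan from the beginning; caching the raw offset where each page ended would avoid that, at the price of keeping some state per query.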

Blacklists are individual to every node, so diversity is still assured. Someone wants a very narrow or “safe” search, someone else wants everything.

I personally try to keep my index clean and neat. I don’t need Facebook pages that offer me nothing but a log-in, indexed a million times, so I blacklist facebook.com. There is plenty of spam on the web, easily available to crawlers and filling up the local index. I delete a lot from my index.

Even when crawling news sites, I omit index/category/tag/author pages and keep only the articles, because the articles contain the information, not the indexes.
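As a rough illustration of that kind of rule, here is a small Python sketch that flags listing pages by their URL path. The segment names and the pattern are assumptions for the example; real sites vary, so any pattern needs checking against the site being crawled. The same regular expression could serve as a starting point for an exclude filter in whatever crawler you use.

```python
import re
from urllib.parse import urlsplit

# Assumed path segments that mark listing pages rather than articles.
LISTING_SEGMENTS = ("index", "category", "tag", "author")

# Match a whole path segment only, e.g. "/tag/" or a trailing "/tag",
# so that an article slug like "/tagline-story" is not caught.
LISTING_RE = re.compile(
    r"/(?:%s)(?:/|$)" % "|".join(LISTING_SEGMENTS), re.IGNORECASE
)


def is_listing_page(url: str) -> bool:
    """Return True if the URL path contains one of the listing segments."""
    return bool(LISTING_RE.search(urlsplit(url).path))


def articles_only(urls):
    """Keep only the URLs that do not look like listing pages."""
    return [u for u in urls if not is_listing_page(u)]
```

Note that this deliberately does not match file names such as `index.html`; extend the pattern if a site uses those for its listings.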

What are your indexing strategies?